GPU clouds and AI inference platforms are rapidly becoming the backbone of enterprise AI. But customers are no longer evaluating providers on price or raw compute alone.

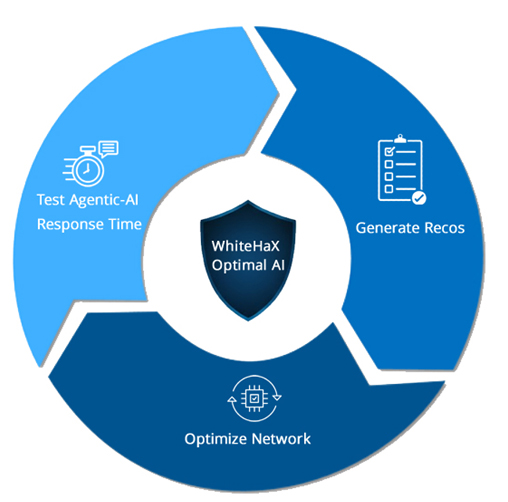

Without AI-specific validation, performance incidents, noisy-neighbor effects, and runaway inference costs become your problem, whether they are caused by customers or by attackers. WhiteHaX AI-ASM enables GPU and AI inference providers to turn these risks into competitive advantages.

Why SecureAI + OptimalAI Are Powerful for Hosting Providers

| Customer Demand | What WhiteHaX AI-ASM Delivers | Provider Advantage |
|---|---|---|
| Secure AI workloads | AI-specific attack and abuse testing | Reduced platform risk |
| Predictable latency | Real-world inference performance data | Stronger SLAs |
| Cost transparency | Cost-amplification and abuse analysis | Lower churn |
| Evidence of need for more compute | Data showing how added capacity improves response time and performance | Upsell to more or higher-tier GPUs |
| Enterprise trust | Security and performance evidence | Premium positioning |

How Hosting Providers Can Use WhiteHaX AI-ASM

Customer-Facing Offers

Internal Platform Validation

Why WhiteHaX AI-ASM for GPU & AI Inference Platforms

Bottom Line

Raw GPU capacity is no longer enough. Enterprises want secure, predictable, and cost-controlled AI infrastructure.

WhiteHaX AI-ASM helps GPU and AI inference providers prove they deliver it, turning trust, performance, and resilience into a competitive edge.

Proprietary and copyrighted material of IronSDN, Corp. © Copyright 2022. All rights reserved.

For more information, visit www.WhiteHaX.com